LocalDocs

LocalDocs brings the information in files on your device into your LLM chats, privately.

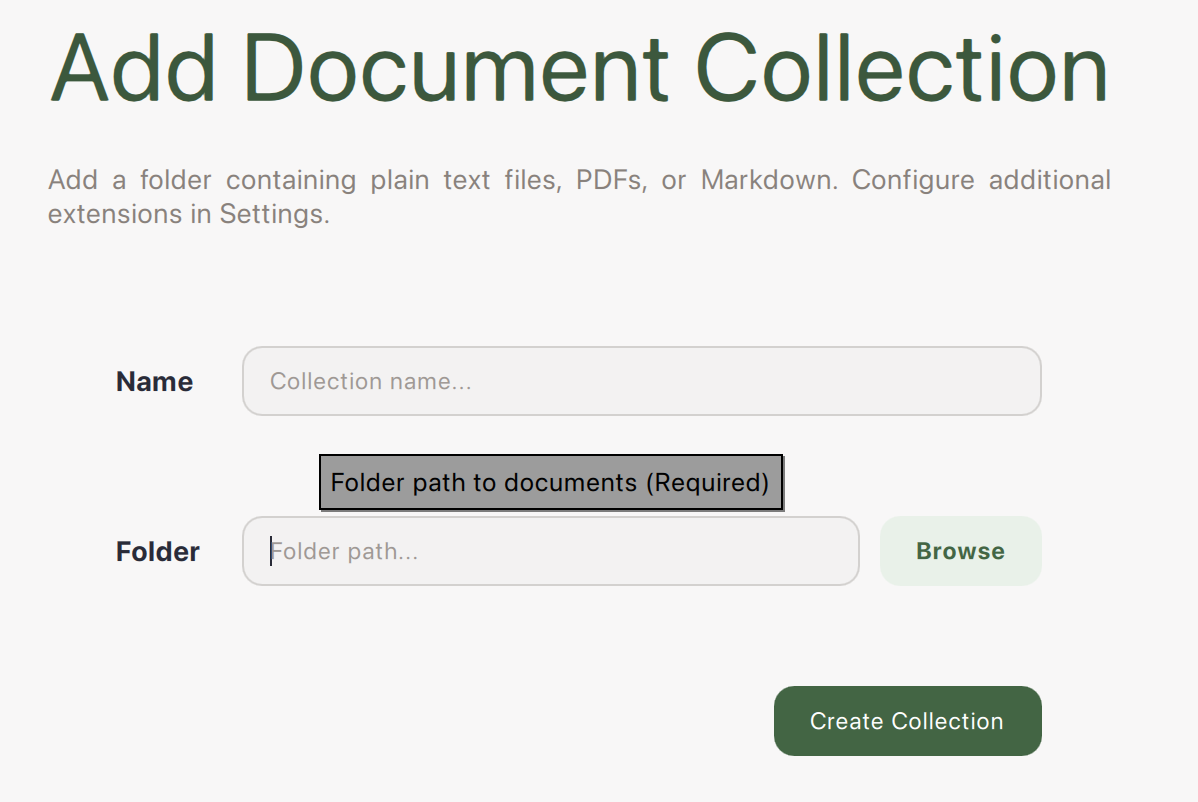

Create LocalDocs


-

Click

+ Add Collection. -

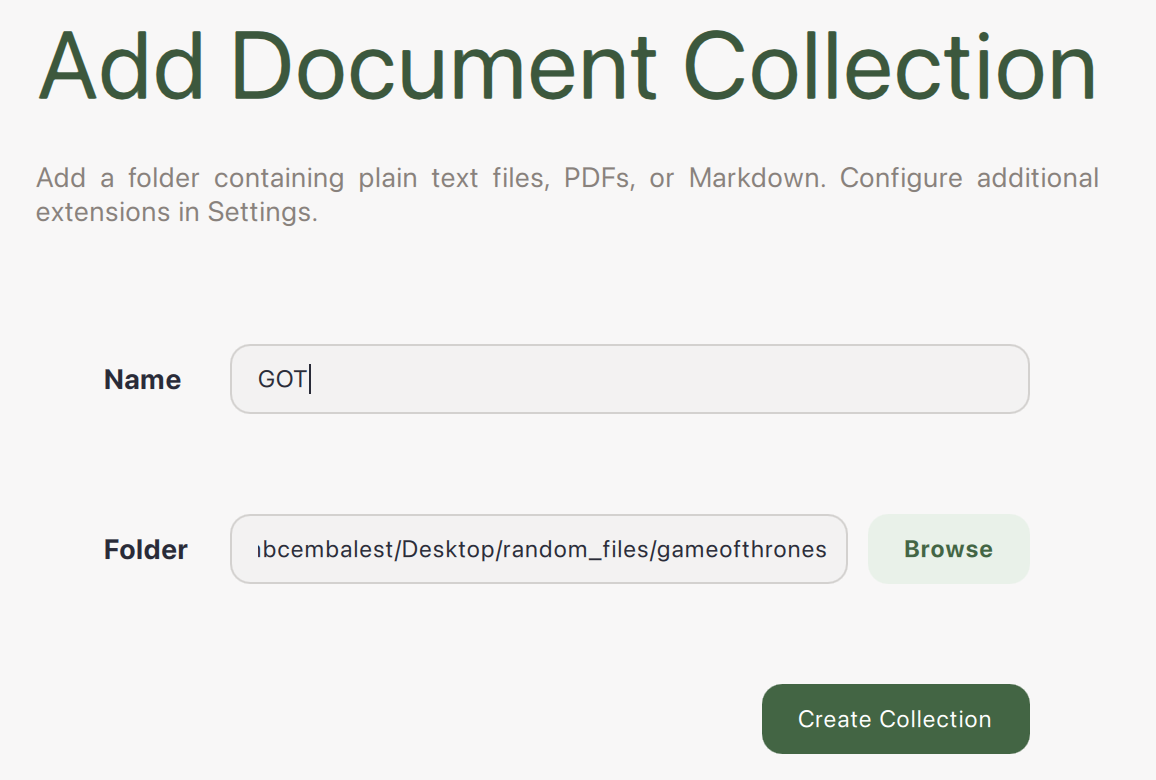

Name your collection and link it to a folder.

-

Click

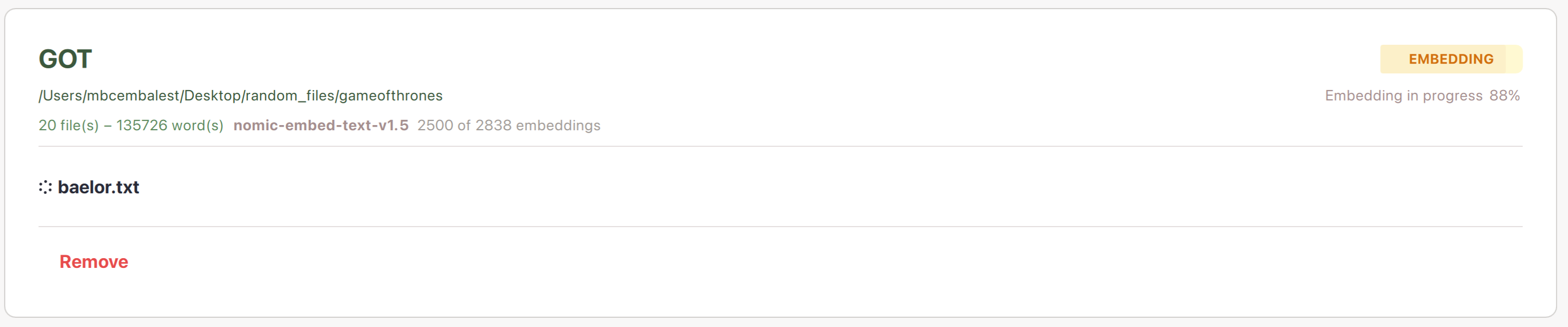

Create Collection. Progress for the collection is displayed on the LocalDocs page.

Embedding in progress You will see a green

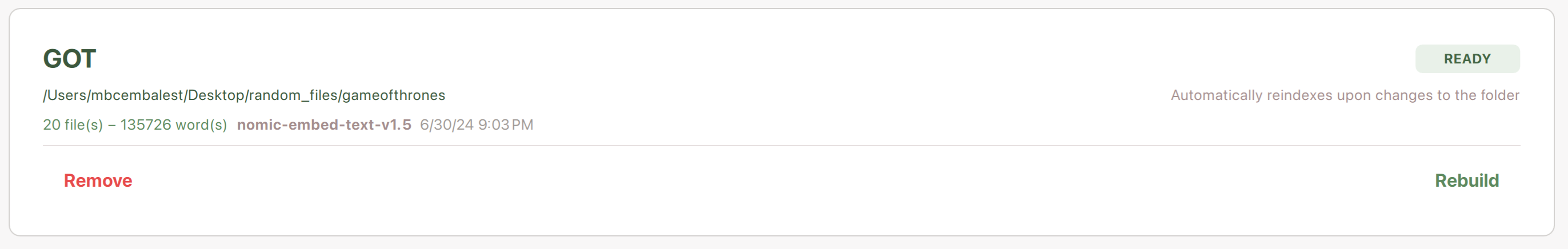

Readyindicator when the entire collection is ready.Note: you can still chat with the files that are ready before the entire collection is ready.

Embedding complete Later on if you modify your LocalDocs settings you can rebuild your collections with your new settings.

-

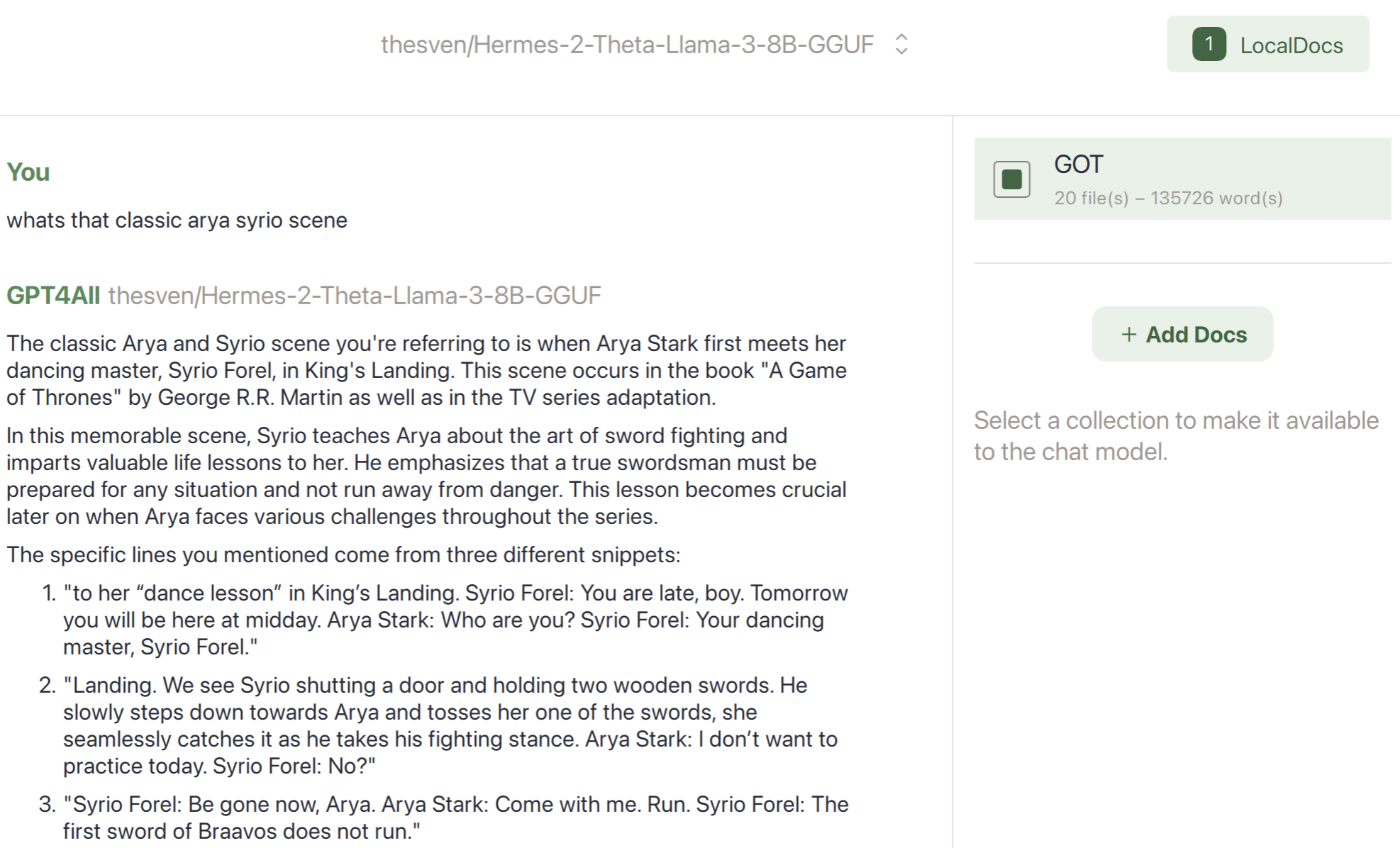

In your chats, open

LocalDocswith button in top-right corner to give your LLM context from those files.

LocalDocs result -

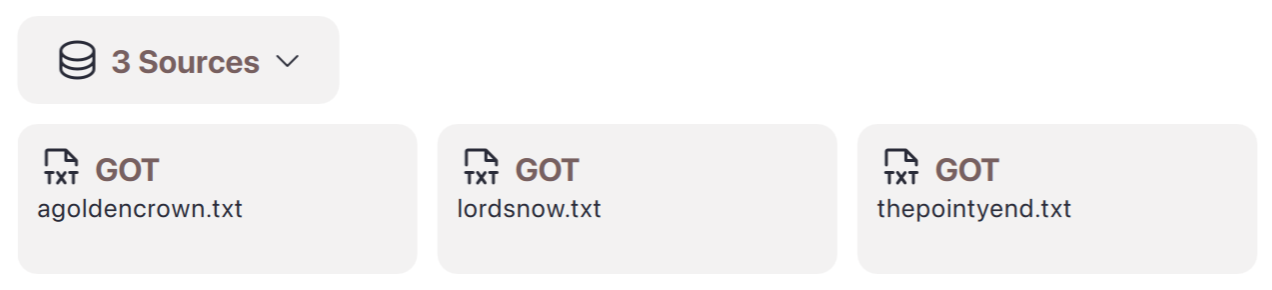

See which files were referenced by clicking

Sourcesbelow the LLM responses.

Sources

How It Works

A LocalDocs collection uses Nomic AI's free, fast, on-device embedding models to index your folder into text snippets, each of which gets an embedding vector. These vectors let us find snippets from your files that are semantically similar to the questions and prompts you enter in your chats. We then include those semantically similar snippets in the prompt sent to the LLM.
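The index-then-retrieve flow described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not GPT4All's implementation: `embed` is a hypothetical toy stand-in (a unit-length bag-of-words vector) for Nomic's embedding models, and the snippet size, the number of retrieved snippets `k`, and the prompt wording are all illustrative choices.

```python
import math
import re
from collections import Counter


def embed(text: str) -> dict[str, float]:
    """Hypothetical toy stand-in for an embedding model.

    Returns a sparse, unit-length bag-of-words vector. LocalDocs uses
    Nomic's on-device embedding models instead.
    """
    counts = Counter(re.findall(r"[a-z0-9]+", text.lower()))
    norm = math.sqrt(sum(c * c for c in counts.values())) or 1.0
    return {word: c / norm for word, c in counts.items()}


def cosine(a: dict[str, float], b: dict[str, float]) -> float:
    # Both vectors are unit length, so their dot product is the cosine similarity.
    return sum(weight * b.get(word, 0.0) for word, weight in a.items())


def split_into_snippets(text: str, max_words: int = 50) -> list[str]:
    # Assumed chunking rule: fixed-size runs of words.
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def build_index(files: dict[str, str]) -> list[tuple[str, str, dict[str, float]]]:
    # Each snippet is stored with its source file name and its embedding vector.
    index = []
    for name, text in files.items():
        for snippet in split_into_snippets(text):
            index.append((name, snippet, embed(snippet)))
    return index


def retrieve(index, query: str, k: int = 2):
    # Rank every snippet by similarity to the query and keep the top k.
    q = embed(query)
    ranked = sorted(index, key=lambda entry: cosine(q, entry[2]), reverse=True)
    return ranked[:k]


def build_prompt(query: str, hits) -> str:
    # Hypothetical prompt template: retrieved snippets first, then the question.
    context = "\n".join(f"[{name}] {snippet}" for name, snippet, _ in hits)
    return f"Use the following excerpts to answer.\n{context}\n\nQuestion: {query}"


files = {
    "garden.txt": "Tomatoes need full sun and regular watering in summer.",
    "taxes.txt": "File your tax return before the April deadline to avoid penalties.",
}
index = build_index(files)
hits = retrieve(index, "How often should I water tomatoes?", k=1)
print(build_prompt("How often should I water tomatoes?", hits))
```

Because the toy vectors only match exact words, "water" and "watering" count as different terms here; a real embedding model places semantically related text close together, which is what allows matching on meaning rather than exact wording.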

To try the embedding models yourself, we recommend using the Nomic Python SDK.